Azure Remote Rendering (ARR)

For users of Microsoft HoloLens 2

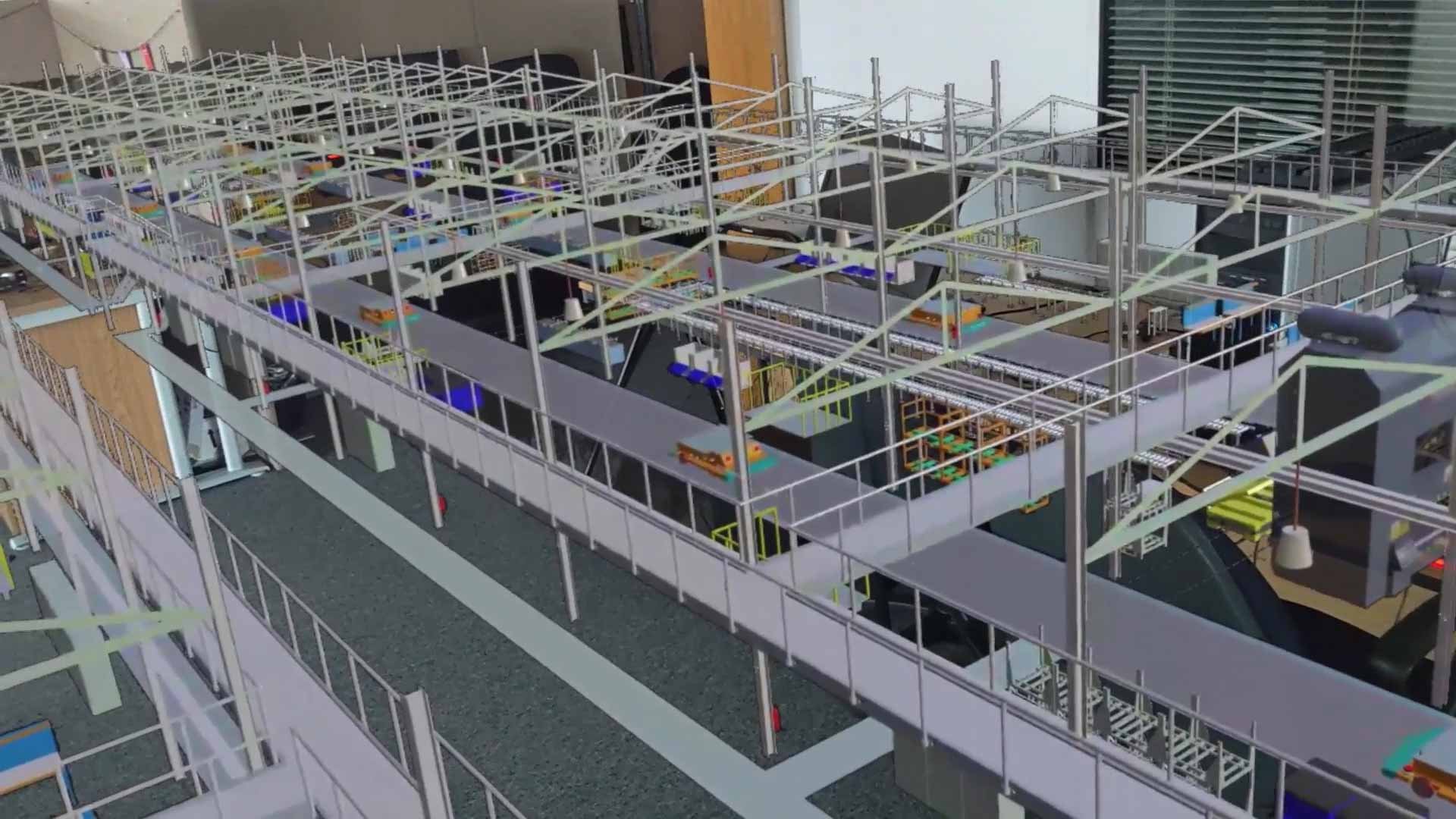

Removing Access Constraints for Large Datasets and High-Quality Rendering

Combining Microsoft ARR with the advanced data preparation and optimization capabilities of the Theorem Visualization Pipeline, part of the TheoremXR product suite, removes the constraints on working with large datasets and high-quality rendering.

As a member of the Microsoft Mixed Reality Partner Program (MRPP), we have worked closely with Microsoft to ensure the Azure platform integrates seamlessly with our pipeline and experiences, enabling us to deliver innovative, class-leading, collaborative mixed reality experiences for Visualization, Design Review, and Factory Layout on the HoloLens 2.

Driving Innovation Forwards

With enhanced data sharing and supplier collaboration, users are taking advantage of the new spatial computing and visualization capabilities of HoloLens. Our engineering and manufacturing customers are driving our ARR focus forwards, using HoloLens and ARR to improve their design quality and deliver world-class, innovative engineering and manufacturing products and services.

ARR is the obvious 'Next Step' for existing XR users and for anyone who needs to deploy a Mixed Reality (MR) enabled use case.

Exceeding Design Data Expectations

Theorem’s ARR solution delivers remotely rendered data from the Azure Remote Rendering servers and lets users add locally rendered content, combining streamed data with existing managed assets.

This is all facilitated by the seamless integration between Theorem’s Visualization Pipeline and the ARR Server, which provides an easy-to-use mechanism for delivering content to the TheoremXR Experiences.

What is Remote Rendering?

Every day we take for granted that our phones, laptops and PCs render 2D and 3D data so that we can visualize and interact with it. Extended Reality (XR) devices have the same requirement. However, the limited capacity of XR devices can mean that a device cannot render the volume of data needed to deliver a good experience for the user. XR devices also need to achieve high frame rates to ensure a good experience.
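To put the frame rate requirement into numbers, the time available to render each frame is simply the reciprocal of the target frame rate. The sketch below is illustrative only: the function name `frame_budget_ms` is hypothetical, and the frame rates are assumed example values rather than figures for any particular device.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Return the time available to render one frame, in milliseconds."""
    if target_fps <= 0:
        raise ValueError("target_fps must be positive")
    return 1000.0 / target_fps


# Example frame rates are assumptions chosen for illustration,
# not taken from any device specification.
for fps in (30, 60, 90):
    print(f"{fps} fps -> {frame_budget_ms(fps):.1f} ms per frame")
```

The higher the target frame rate, the less time the renderer has per frame, which is why large models put pressure on device hardware.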

Remote rendering offloads the rendering process to a separate server, which might be in the cloud or on an internal network. Those servers normally include high-end CPUs and GPUs with large amounts of RAM. The rendered data is then streamed to the device, whether that is a phone or an XR device, and displayed. This significantly reduces the processing load on the device.

Without good rendering performance, the quality of the graphics and the richness of effects such as textures and lighting are lost. Remote rendering removes the need for high-performance CPUs and GPUs on the XR device, because rendering is done by the rendering server, which solves the quality and performance problem.

Why Use Remote Rendering?

On XR devices, the number of polygons, the file size, and the number of individual parts determine whether a 3D object can be displayed with both good quality graphics and good performance (frame rate).
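The three factors named above can be read as a simple budget check: if a model exceeds what the device can handle on any of them, it is a candidate for remote rendering. The following is a minimal sketch under stated assumptions, not Theorem's or Microsoft's method. The types `ModelStats` and `DeviceBudget`, the functions, and every threshold value are hypothetical and would need to be replaced with measured limits for a real device.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ModelStats:
    """Size characteristics of a 3D model (hypothetical structure)."""
    polygons: int
    file_size_mb: float
    part_count: int


@dataclass(frozen=True)
class DeviceBudget:
    """Limits a device can render locally (hypothetical; values are assumptions)."""
    max_polygons: int
    max_file_size_mb: float
    max_parts: int


def exceeded_limits(model: ModelStats, budget: DeviceBudget) -> List[str]:
    """Return the names of the factors on which the model exceeds the budget."""
    exceeded = []
    if model.polygons > budget.max_polygons:
        exceeded.append("polygons")
    if model.file_size_mb > budget.max_file_size_mb:
        exceeded.append("file_size_mb")
    if model.part_count > budget.max_parts:
        exceeded.append("part_count")
    return exceeded


def choose_rendering_mode(model: ModelStats, budget: DeviceBudget) -> str:
    """Return "remote" if any limit is exceeded, otherwise "local"."""
    return "remote" if exceeded_limits(model, budget) else "local"


# Placeholder budget and models: all numbers are invented for illustration.
headset = DeviceBudget(max_polygons=500_000, max_file_size_mb=100.0, max_parts=2_000)
small_part = ModelStats(polygons=200_000, file_size_mb=40.0, part_count=500)
full_assembly = ModelStats(polygons=50_000_000, file_size_mb=3_000.0, part_count=120_000)

print(choose_rendering_mode(small_part, headset))
print(choose_rendering_mode(full_assembly, headset))
```

A real decision would also weigh the use case and the quality required, so a check like this is best treated as a first filter.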

One significant issue many XR users face is the limited computing and graphics power of smartphones, tablets and XR headsets. Whilst new devices offering better performance appear every few months, the need to display very large data sets at high quality will remain an issue: there are physical barriers to overcome, and the form factor must shrink if immersive technologies are to reach the mainstream.

The result is a trade-off between graphics quality and performance. As with most things, the devil is in the detail: the device, the data size, the quality needed, the target frame rate and the use case will all affect the outcome.

How do you get your data into ARR?

Theorem’s Visualization Pipeline provides a fully automated process to simplify and remove details of 3D CAD content so that the XR object is delivered with the optimum size and quality to achieve good performance on the user's XR device of choice. However, there are use cases where very large data sets cannot be optimised down to a polygon count the device can handle while still maintaining quality.

The trade-off between optimisation and quality can result in a model that is unusable. Remote rendering solves that problem, enabling models with tens or hundreds of millions of polygons to be used on XR headsets and mobile devices.

Offloading the heavy computation needed to render large data sets at high quality from the device to a remote rendering server enables lower-cost headsets to still deliver great graphics quality and performance to the user.

Demonstration Request

Register now to request your free demonstration of the TheoremXR product suite.

In just a few simple steps, we will be able to validate your request based upon the details you provide.

Note: Please use your company email address for validation purposes as we will not respond to personal email accounts.

- Complete all of your required details.
- Let us know which TheoremXR product you are looking to learn more about.
- If you have a specific use case that you would like to discuss, then please let us know.
- We will contact you to discuss suitable days and times and get everything set up.
