We recently sat down with our Consultancy Director, Ryan Dugmore, and Sales Director, Chris Litchfield, to discuss What’s New with Theorem-XR and the latest hot topics surrounding Extended Reality (XR) in engineering.

Watch the full 15-minute video

What is Theorem-XR?

Powered by the Theorem Visualization Pipeline, Theorem-XR enables companies to visualize their 3D CAD data in context at full scale, in Augmented, Mixed and Virtual Reality (collectively known as Extended Reality – XR).

“We’ve developed a suite of applications which are fundamentally based on a server solution – the Visualization Pipeline. This is about optimizing and preparing CAD data for use in XR applications. We understand that our customers have needs for XR devices and we’ve delivered a number of off-the-shelf solutions that we can deliver for customers to use. Similarly, we’ve worked with customers who develop their own applications, but want to use the pipeline capability to furnish them” – Ryan Dugmore on Theorem-XR and the Visualization Pipeline

An overview of Theorem-XR and the Theorem Visualization Pipeline

Data and Device Agnostic

Chris Litchfield (CL): With this being such a young industry and devices appearing on almost a monthly basis, where are Theorem in the space of supporting devices?

Ryan Dugmore (RD): “As with our historic CAD Translation products, we’re CAD and device agnostic. We can read any data and push it to the downstream system you want – and we’ve gone for that approach for Theorem-XR as well. We understand that the market is heavily changing and that there are new devices released day-on-day. The way we’ve architected our experiences is to be able to support these new devices quickly as they come to market, and as others fall off. It’s an ever-changing market that we are working in, and we have to be prepared to support customers as the devices change.”

CL: Where do you see the benefit of the Pipeline sitting when you’re talking to companies?

RD: “I think everyone’s really on an exploratory session – understanding where VR/MR/AR can give you the best ROI in your business. What the Pipeline does is enable people, at an enterprise level, to optimise and prepare data for usage, no matter their use case. The real benefit of the Pipeline is that it’s robust. We can support systems that come along, prepare data and make it ready for any particular use case that arrives.”

Learn more about the Visualization Pipeline

Optimizing data for XR

CL: Companies quite often start their journey with the device, then try and shoehorn the rest of their process into the device. Something that is really overlooked, until they come up against the problem, is the optimization process and why you need to be flexible in that approach…

RD: “As you’ve touched on there, the device and the use case define what optimization is required. For some devices, you need to heavily optimize and prepare data so it’s usable. The aim of the Pipeline’s flexibility is to enable you to optimize data for your use case. Or, if you’re streaming data down from a remote rendering service, you can keep a high fidelity model and keep the quality. It’s purely based on the use case, and we work flexibly with the Pipeline to understand what a customer needs and hopefully prepare it based on that.”

Preparing and optimizing data for XR using the Visualization Pipeline

Azure Remote Rendering (ARR) and HoloLens 2

CL: What capabilities have we got surrounding Azure Remote Rendering and what’s new in the HoloLens 2?

RD: “At the recent release, we’ve updated our HoloLens 2 offerings. We’ve now ported our experiences into Azure. What that enables the user to do, is to take much higher volumes of data with much higher quality. If we put it through our Pipeline, it optimizes it, prepares it, pushes it to a Remote Rendering farm, where it sits up in the cloud. Then, when you access it from within our application, it streams that down live to the device.”

Learn how to render high-quality engineering data in Microsoft HoloLens 2

“We can work on that collaboratively. Multiple users in a HoloLens or in VR can be working in the same model space, streaming high quality, high volumes of data down. We’re a Microsoft Silver Partner so we’ve worked closely with them to develop this capability, and we’re very proud of what it can give. It’s enabled us to take all of our standard applications, but take out the limitation of processing or rendering everything locally on the device - it’s purely rendered on the cloud. That said, if there’s still a need to pull in a file locally, you can pull that in alongside the rendered data. So, again, it’s about the flexibility of the device and the application to enable you to do the task required.”

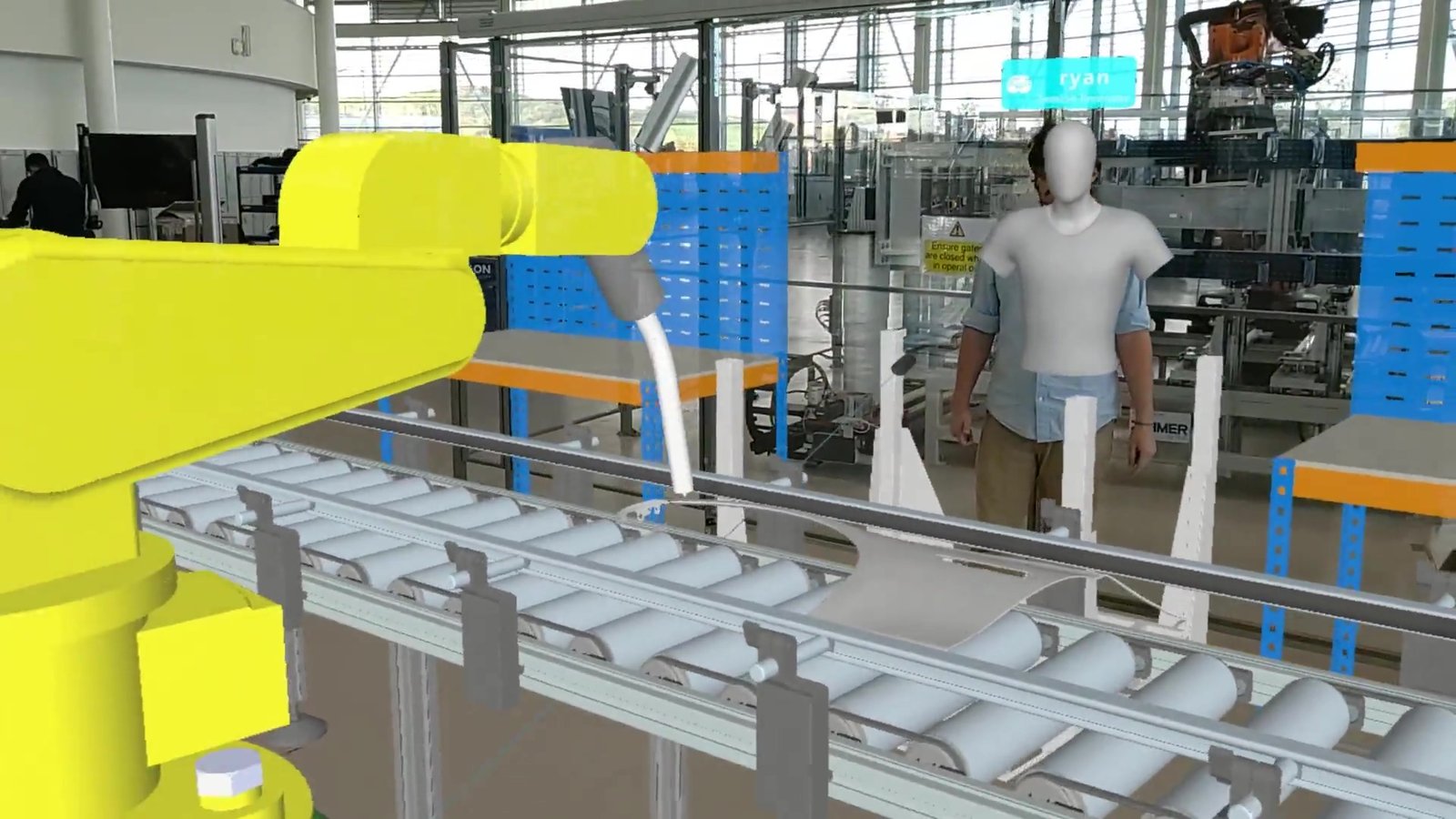

Microsoft HoloLens 2 in Theorem-XR's Factory Layout and Azure Remote Rendering

Device Collaboration

CL: One of the topics that became a ‘hot topic’ early in the pandemic was collaboration, and the ability to collaborate. What devices can you bring into a collaborative session?

RD: “The nature of the Pipeline is that it controls it all from a central location. The server controls everything and the clients link in, so as long as there is network access, you can link in from any device. VR, MR, and even desktop users can see what’s going on, look at the Design Review that’s happening, apply comments etc. Anything that you can do singularly, you can do in this collaborative environment.”

Collaborative Factory Layout on HoloLens 2 with robot animation

Newly supported VR devices

CL: What’s the latest offering from Theorem regarding device support in VR?

RD: “We attempt to support the devices as they’re released. Obviously, we’ve got a development cycle so we include what we can. Here, we have the VIVE Focus 3. This has got wireless business streaming. Being able to stream to the VR device, you’re no longer tethered.”

“When new devices are released, our architecture enables us to, for not much work, bring that device alongside. We don’t mind what devices you buy. This one’s been released, we’ve coded for it, and it’s now available to use. Similarly, we’ve got others that are being worked that we are porting our software to.”

CL: So, it’s based on what’s available and who’s requesting it?

RD: “There are some big players in the market that I’m sure this year will bring out more devices - and we’ve got to be ready to support customers with them.”

HTC VIVE Focus 3 and Guides training in Virtual Reality

Theorem-AR

CL: What are Theorem’s offerings in the Augmented Reality (AR) area?

RD: “At this release, we’ve refreshed all of our AR offerings. We’ve essentially brought them up to the latest spec, with similar menus and features to those we offer in the other devices. There are two main methods that you can use with the AR offering…

“Firstly, the visual digital twin, which is being able to recognise data. If you have a physical object and you’ve uploaded the digital version to the server, you can snap to the physical object using the tablet app. It’s about being able to view the data in the context of its physical object. We’ve then got the AR Viewer capability, which uses the capabilities provided by Android and Apple with ARCore and ARKit. It’s about being able to place your objects in recognition of the space that you’re in.”

“We’ve essentially refreshed our AR offering as more people begin to use tablets and phones on the shop floor and ask if they can have this capability available to them. So, we’ve listened to the market and brought AR up to spec at this release.”

Visualizing 3D design data in Augmented Reality

Want to know more?

If you wish to find out more, visit our Theorem-XR pages or get in touch with our team. Make sure to keep up to date with the latest Theorem news by following us on LinkedIn and Twitter.